Apple’s Vertical Integration

When Apple was developing a metal case for the MacBook, its chief designer Jony Ive saw a major problem with metal: it doesn't transmit light. Adding an indicator light to the MacBook, such as the "breathing" sleep light, would therefore require cutting more holes in the metal, and Ive felt that too many holes would be unsightly.

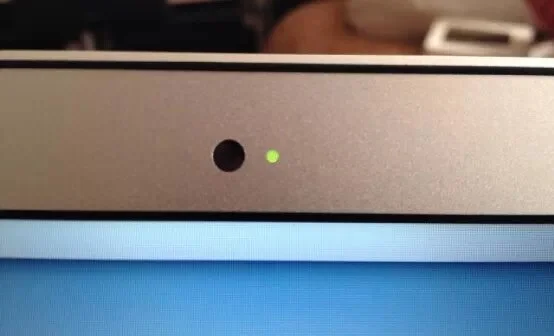

According to legend, Ive assembled a team to work specifically on this problem. They discovered that tiny laser-drilled holes in aluminum could let light through while remaining invisible to the eye. Apple couldn't do this on its own at the time, but its research turned up a U.S. company with lasers capable of punching holes 20 μm in diameter, at a price of $250,000. So Apple bought the company.

Apple made a move this big just to let light pass through aluminum without compromising the look of its products. Although the technique is no longer rare, the legacy of that acquisition can still be seen in devices such as Apple's Trackpad.

Apple has done this far more than once or twice: for chips, displays (LTPO, microLED), sensors (Face ID / Touch ID), haptics (Taptic Engine), and other technologies, it has readily turned to acquisitions. For Face ID on the iPhone X alone, the related companies Apple acquired include at least the 3D sensing company PrimeSense, camera module maker LinX, AR company Vrvana, image sensor supplier InVisage Tech, and AR eyewear maker Akonia Holographics, among others.

Apple now makes (or co-develops) its own chips and components, but unlike its upstream suppliers, it does not sell them; they go only into its own consumer products. One of the big differences between Apple's thinking and that of typical consumer electronics makers is that Apple starts from functional and performance requirements and then looks for a solution. If no solution exists, it builds one itself or works with upstream suppliers to build it together. This approach seems to date back to Apple's earliest days.

In 2018, the U.S. edition of EE Times published an article titled "Apple Goes Vertical & Why It Matters", describing how Apple combines acquisitions, investments, in-house teams, and close hardware-software cooperation to deliver a particular feature. Face ID is a case in point: Apple not only acquired a group of image sensor and optics companies, but also added the Neural Engine to its chip, paired it with the existing Secure Enclave, and supported the feature quickly at the system and software level.

As early as the Android 4.0 era (Ice Cream Sandwich, released in late 2011), Google had built face recognition into the operating system. But the optics and sensors, the chip's TEE (trusted execution environment), and the system implementation were all far from delivering a good user experience. As an operating system vendor, Google lacks Apple's vertical integration, so both experience and security naturally suffer. This is a typical example. Even more formidable is that once Apple brings a technology to maturity, it can ignite demand for that technology and even its entire supply chain, as happened with structured light and 3D ToF (though it does not always succeed; 3D Touch is one counterexample).