Artificial Intelligence and Machine Learning

By Gino Masini, Yin Gao, Sasha Sirotkin

5G brings more stringent requirements for Key Performance Indicators (KPIs) like latency, reliability, user experience, and others; jointly optimizing those KPIs is becoming more challenging due to the increased complexity of foreseen deployments. Operators and vendors are now turning their attention to Artificial Intelligence and Machine Learning (AI/ML) to address this challenge. For this reason, following RAN plenary approval, 3GPP RAN3 has recently started a new Release-17 study on the applications of AI/ML to RAN.

AI can be broadly defined as getting computers to perform tasks regarded as uniquely human. ML is one category of AI techniques: a large and somewhat loosely defined area of computer algorithms able to automatically improve their performance without explicit programming. AI algorithms were first conceived circa 1950, but only in recent years has ML become very popular, partly due to massive advances in computational power and the ability to store vast amounts of data. ML techniques have made tremendous progress in fields such as computer vision, natural language processing, and others.

ML algorithms can be divided into the following types:

-Supervised learning: given labeled training data and the desired output, the algorithms produce a function which can be used to predict the output. In other words, supervised learning algorithms infer a generalized rule that maps inputs to outputs. Most Deep Learning approaches are also based on supervised learning.

-Unsupervised learning: given some training data without pre-existing labels, the algorithms can search for patterns to uncover useful information.

-Reinforcement learning (RL): unlike the other types, which include a training phase (typically performed offline) and an inference phase (typically performed in “real time”), this approach is based on “real-time” interaction between an agent and the environment. The agent performs a certain action changing the state of the system, which leads to a “reward” or a “penalty”.
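
The agent-environment loop described for reinforcement learning can be illustrated with a toy sketch. Everything below is an assumption made for illustration and is not taken from the RAN3 study: a five-cell corridor stands in for the environment, tabular Q-learning with epsilon-greedy exploration stands in for the agent, and the names `step`, `train`, and `greedy_policy` are hypothetical.

```python
import random

N_STATES = 5          # hypothetical corridor with cells 0..4; cells 0 and 4 end an episode
ACTIONS = (-1, +1)    # action index 0 = step left, index 1 = step right


def step(state, action_index):
    """Environment: apply the action and return (next_state, reward, done)."""
    next_state = state + ACTIONS[action_index]
    if next_state == N_STATES - 1:
        return next_state, 1.0, True    # "reward" at the right end
    if next_state == 0:
        return next_state, -1.0, True   # "penalty" at the left end
    return next_state, 0.0, False


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning: the agent learns only from interaction."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = N_STATES // 2            # start in the middle cell
        for _ in range(100):             # cap the episode length
            if rng.random() < epsilon:
                action = rng.randrange(2)                         # explore
            else:
                action = 0 if q[state][0] >= q[state][1] else 1   # exploit
            next_state, reward, done = step(state, action)
            # Move the estimate toward reward plus discounted future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    """Best-known action index for each non-terminal cell."""
    return {s: (0 if q[s][0] >= q[s][1] else 1) for s in range(1, N_STATES - 1)}
```

Calling `greedy_policy(train())` returns the action the trained agent prefers in each inner cell. There is no separate offline training phase: the value estimates change after every single action, which is the contrast with the other two types that the text draws.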

Perhaps the most obvious candidate for AI/ML in RAN is Self-Organizing Networks (SON) functionality, currently part of LTE and NR specifications (it was initially introduced in Rel-8 for LTE). With SON, the network self-adjusts and fine-tunes a range of parameters according to the different radio and traffic conditions, alleviating the burden of manual optimization for the operator. While the algorithms behind SON functions are not standardized in 3GPP, SON implementations are typically rule-based. One of the main differences between SON and an AI-based approach is the switch from a reactive paradigm to a proactive one.

The study has just begun, and at the time of writing we can only provide initial considerations. According to the mandate received from RAN, our study focuses on the functionality and the corresponding types of inputs and outputs (massive data collected from RAN, core network, and terminals), and on potential impacts on existing nodes and interfaces; the detailed AI/ML algorithms are out of RAN3 scope. Within the RAN architecture defined in RAN3, this study prioritizes NG-RAN, including EN-DC. In terms of use cases, the group has agreed to start with energy saving, load balancing, and mobility optimization. Although the importance of avoiding a duplication of SON was recognized, additional use cases may be discussed as the study progresses, according to companies’ contributions. The aim is to define a framework for AI/ML within the current NG-RAN architecture; the AI/ML workflow being discussed should not prevent “thinking beyond” it if a use case so requires.

Stay tuned for further updates as the study progresses in RAN3, or consider joining us in our journey into the “uncharted” territory of AI/ML in NG-RAN.

Electricity load forecasting: a systematic review

Isaac Kofi Nti, Moses Teimeh, […]Adebayo Felix Adekoya

Journal of Electrical Systems and Information Technology

Abstract

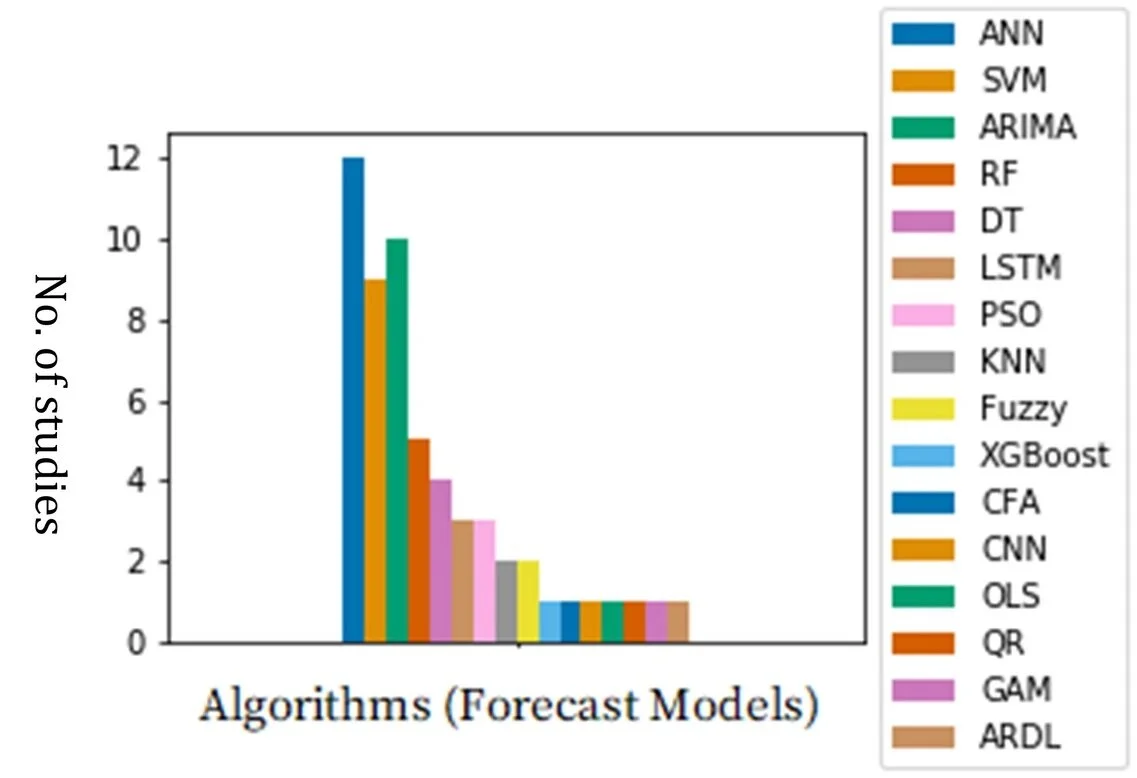

The economic growth of every nation is closely tied to its electricity infrastructure, network, and availability, since electricity has become central to everyday life in the modern world. Hence, the global demand for electricity for residential and commercial purposes has increased enormously. At the same time, electricity prices have kept fluctuating over the past years, and electricity generation remains inadequate to meet global demand. In response, numerous studies have aimed at estimating future electrical energy demand for residential and commercial purposes, to enable electricity generators, distributors, and suppliers to plan ahead effectively and to promote energy conservation among users. Nevertheless, load forecasting has been one of the major problems facing the power industry since the inception of electric power. The current study undertakes a systematic and critical review of about seventy-seven (77) relevant previous works reported in academic journals over nine years (2010–2020) in electricity demand forecasting. Specifically, attention was given to the following themes: (i) the forecasting algorithms used and their fitting ability in this field, (ii) the theories and factors affecting electricity consumption and the origin of research work, (iii) the relevant accuracy and error metrics applied in electricity load forecasting, and (iv) the forecasting period. The results revealed that 90% of the top nine models used in electricity forecasting were artificial intelligence based, with artificial neural networks (ANN) representing 28%. In this scope, ANN models were primarily used for short-term electricity forecasting, where electrical energy consumption patterns are complicated. Concerning the accuracy metrics used, root-mean-square error (RMSE) (38%) was the most used error metric among electricity forecasters, followed by mean absolute percentage error (MAPE) (35%). The study further revealed that 50% of electricity demand forecasting was based on weather and economic parameters, 8.33% on household lifestyle, 38.33% on historical energy consumption, and 3.33% on stock indices. Finally, we recap the challenges and opportunities for further research in electricity load forecasting locally and globally.

Background

Electricity is pivotal to sustaining technologically advanced industrialisation in every economy [1,2,3]. Almost every activity in this modern era hinges on electricity. The demand for and usage of electric energy increase globally as the years pass [4]; however, the process of generating, transmitting, and distributing electrical energy remains complicated and costly. Hence, effective grid management plays an essential role in reducing the cost of energy production and in increasing generating capacity to meet the growing demand for electric energy [5].

Accordingly, effective grid management involves proper load demand planning, adequate maintenance scheduling for generation, transmission and distribution lines, and efficient load distribution through the supply lines. Therefore, accurate load forecasting will go a long way towards maximising the efficiency of the planning process in the power generation industries [5, 6]. As a means to improve the accuracy of Electrical Energy Demand (EED) forecasting, several computational and statistical techniques have been applied to enhance forecast models [7].

EED forecasting techniques can be clustered into three (3) groups, namely correlation, extrapolation, and a combination of both. Extrapolation techniques (trend analysis) involve fitting trend curves to primary historical data of electrical energy demand so as to mirror the growth trend itself [7, 8]. Here, the future value of electricity demand is obtained by evaluating the trend curve function at the desired future point. Despite the simplicity of this approach, its results are very realistic in some instances [8].
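
Trend extrapolation can be sketched in a few lines. The example below assumes the simplest possible trend curve, a straight line fitted by ordinary least squares; the yearly demand figures are invented for illustration, and the function names `fit_linear_trend` and `extrapolate` are hypothetical rather than taken from the reviewed studies.

```python
def fit_linear_trend(times, loads):
    """Least-squares fit of the trend curve load = intercept + slope * time."""
    n = len(times)
    mean_t = sum(times) / n
    mean_y = sum(loads) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in zip(times, loads))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_t
    return slope, intercept


def extrapolate(trend, future_time):
    """Evaluate the fitted trend curve at a future point in time."""
    slope, intercept = trend
    return intercept + slope * future_time


# Invented historical demand (GWh per year), for illustration only.
years = [2015, 2016, 2017, 2018, 2019]
demand_gwh = [100.0, 110.0, 120.0, 130.0, 140.0]

trend = fit_linear_trend(years, demand_gwh)
forecast_2022 = extrapolate(trend, 2022)
print(f"Forecast demand for 2022: {forecast_2022:.1f} GWh")
```

A real study would likely choose a richer curve (polynomial, exponential, or logistic growth), but the two steps stay the same: fit the curve to history, then evaluate it at the future point of interest.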

On the other hand, correlation techniques (end-use and economic models) involve relating the system load to several economic and demographic factors [7, 8]. Thus, the techniques ensure that analysts capture the association between load growth patterns and other measurable factors. However, the disadvantage lies in forecasting the economic and demographic factors themselves, which is more complicated than the load forecast itself [7, 8]. Usually, economic and demographic factors such as population, building permits, heating, employment, ventilation, air conditioning system information, weather data, building structure, and business are used in correlation techniques [7,8,9]. Nevertheless, some researchers group EED forecasting models into two, viz. data-driven (artificial intelligence) methods (same as the extrapolation techniques) and engineering methods (same as the correlation techniques) [9]. All the same, no single method is accepted as scientifically superior in all situations.

Also, proper planning and useful applications of electric load forecasting require particular “forecasting intervals,” also referred to as “lead time”. Based on the lead time, load forecasting can be grouped into four (4) categories, namely: very short-term load forecasting (VSTLF), short-term load forecasting (STLF), medium-term load forecasting (MTLF) and long-term load forecasting (LTLF) [6, 7, 10]. VSTLF is applicable in real-time control, and its prediction period ranges from minutes to 1 h ahead. STLF covers forecasts from 1 h to 7 days or a month ahead [11]. It is usually used for the day-to-day operations of the utility industry, such as scheduling the generation and transmission of electric energy. MTLF is used for forecasting fuel purchases, maintenance, and utility assessments. Its forecasting period ranges from 1 week to 1 year. LTLF covers forecasts from beyond a year to 20 years ahead; it is suitable for planning the construction of new generation facilities, strategic planning, and changes in the electric energy supply and delivery system [10].
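
The grouping by lead time can be expressed as a small lookup. Note that the ranges quoted above overlap (STLF may extend to a month, while MTLF starts at one week), so the cut-offs below are one possible reading chosen for illustration, not a definition taken from the cited works; `classify_lead_time` is a hypothetical helper name.

```python
from datetime import timedelta


def classify_lead_time(lead_time):
    """Map a forecasting lead time to one of the four categories.

    Assumed cut-offs: up to 1 hour, up to 1 week, up to 1 year, beyond 1 year.
    """
    if lead_time <= timedelta(hours=1):
        return "VSTLF"   # real-time control
    if lead_time <= timedelta(weeks=1):
        return "STLF"    # day-to-day scheduling
    if lead_time <= timedelta(days=365):
        return "MTLF"    # fuel purchase, maintenance
    return "LTLF"        # capacity and strategic planning


for horizon in (timedelta(minutes=15), timedelta(days=2),
                timedelta(days=90), timedelta(days=5 * 365)):
    print(horizon, "->", classify_lead_time(horizon))
```

Moving the STLF boundary to one month would only require changing the second threshold, which is why the cut-offs are kept in one place.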

Notwithstanding the techniques and approaches mentioned above, EED forecasting is seen to be complicated and cannot easily be solved with simple mathematical formulas [2]. Also, Hong and Fan [12] pointed out that electric load forecasting has been a primary problem for the electric power industries since the inception of electric power. Regardless of the difficulty of electric load forecasting, the optimal and proficient economic set-up of electric power systems has continually occupied a vital position in the electric power industries [13]. This exercise permits the utility industries to examine the dynamic growth in load demand patterns to facilitate continuity planning for better and more accurate power system expansion. Consequently, inaccurate prediction leads either to power shortages, which can lead to “dumsor” (the Ghanaian term for persistent power outages), or to unneeded development of the power system and thus unwanted expenditure [7, 14]. Besides, robust EED forecasting is essential in developing countries with a low rate of electrification, as a way of supporting the active development of their power systems [15].

Based on the sensitive nature of electricity demand forecasting in the power industries, there is a need for researchers and professionals to identify the challenges and opportunities in this area. Besides, as argued by Moher et al. [16], systematic reviews are the established reference for generating evidence in any research field for further studies. Our partial search of the literature identified the following papers [10, 12, 17,18,19,20,21], which present comprehensive systematic reviews of the methods, models, and methodologies used in electric load forecasting. Hammad et al. [10] compared forty-five (45) academic papers on electric load forecasting based on inputs, outputs, time frame, the scale of the project, and value. They found that regression models, despite their simplicity, are mostly useful for long-term load forecasting, whereas AI-based models such as ANN, fuzzy logic, and SVM are appropriate for short-term forecasting.

Similarly, Hong and Fan [12] carried out a tutorial review of probabilistic EED forecasting. The paper focused on EED forecasting methodologies, special techniques, common misunderstandings and evaluation methods. Wang et al. [19] presented a comprehensive review of factors that affect EED forecasting, such as the forecast model, evaluation metric, and input parameters. The paper reported that the commonly used evaluation metrics were the mean absolute error, MAPE, and RMSE. Likewise, Kuster et al. [22] presented a systematic review of 113 studies in electricity forecasting. The paper examined the timeframe, inputs, outputs, data sample size, scale, and error type as criteria for comparing models, with the aim of identifying which model is best suited to a given case or scenario.
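
For reference, the three evaluation metrics named above can be computed as in the following minimal sketch. It uses the standard textbook definitions rather than any formula quoted from the reviewed papers, and the sample load values are invented for illustration.

```python
import math


def mae(actual, forecast):
    """Mean absolute error, in the same unit as the load."""
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)


def rmse(actual, forecast):
    """Root-mean-square error; penalises large misses more than MAE does."""
    return math.sqrt(sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual))


def mape(actual, forecast):
    """Mean absolute percentage error; undefined when an actual value is zero."""
    return 100.0 * sum(abs((a - f) / a) for a, f in zip(actual, forecast)) / len(actual)


# Invented hourly loads (MW), for illustration only.
actual_mw = [100.0, 200.0]
forecast_mw = [110.0, 190.0]

print("MAE :", mae(actual_mw, forecast_mw))
print("RMSE:", rmse(actual_mw, forecast_mw))
print("MAPE:", round(mape(actual_mw, forecast_mw), 2), "%")
```

MAE and RMSE are scale-dependent, so they are only comparable between studies that forecast loads of similar magnitude, whereas MAPE is a percentage and breaks down when actual loads approach zero. This is one reason why no single metric suits every dataset.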

Also, Zhou et al. [17] presented a review of electric load classification in the smart-grid environment. The paper focused on the commonly used clustering techniques and well-known evaluation methods for EED forecasting. Another study [21] presented a review of short-term EED forecasting based on artificial intelligence. Mele [20] presented an overview of the primary machine learning techniques used for forecasting short-term EED. Gonzalez-Briones et al. [18] examined the critical machine learning models for EED forecasting using a 1-year dataset of a shoe store. Panda et al. [23] presented a comprehensive review of LTLF studies, examining the various techniques and approaches adopted in LTLF.

The literature discussed above shows that two studies [20, 21] present comprehensive reviews of STLF, while [23] addresses forecasting models for LTLF. The study in [24] was entirely dedicated to STLF. Only a fraction (10%) of the above systematic review studies included STLF, MTLF and LTLF papers in their review; however, as argued in [10], the lead time (forecasting interval) is a factor that positively influences the performance of a chosen model for EED forecasting studies. Again, a high percentage of these studies [10, 12, 17,18,19,20,21,22, 24] concentrated on the methods (models), input parameters, and timeframe. Nevertheless, Wang et al. [19] revealed that the primary factors that influence EED forecasting models include the property (characteristic) parameters of the building and weather parameters. Besides, these parameters are territory-dependent and culture-bound: the weather pattern is not the same worldwide, nor are the same building architecture and materials used globally.

Notwithstanding, a higher percentage of previous systematic review studies overlooked the origin of the studies and the datasets of EED forecasting papers. Also, only a few studies [12, 17, 19] examined the evaluation metrics used in EED forecasting models. However, as pointed out in [17], there is no single validity index that can correctly deal with any dataset and always offer better performance.

Despite all these review studies [10, 12, 17,18,19,20,21,22, 24] on electricity load forecasting, a comprehensive systematic review that takes into account all possible factors that influence EED forecast models, such as the forecasting load (commercial, residential and combined), the forecast model (conventional and AI), model evaluation metrics and forecasting type (STLF, MTLF, and LTLF), remains an open area for research. Hence, to fill this gap, this study presents an extensive systematic review of state-of-the-art literature on electrical energy demand forecasting. The current review is classified according to the forecasting load (commercial, residential, and combined), the forecast model (conventional, AI and hybrids), model evaluation metrics, and forecasting type (STLF, MTLF, and LTLF). The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) flow diagram was adopted for this study based on its ability to advance the value and quality of the systematic review as compared with other guidelines.

……

most used algorithms for electricity forecasting

Conclusions

The current study sought to review state-of-the-art literature on electricity load forecasting to identify the challenges and opportunities for future studies. The outcome of the study revealed that electricity load forecasting is seen as complicated by both engineers and academics and is still an ongoing area of research. The key findings are summarised as follows.

1. Most studies (90%) have applied AI in electrical energy demand forecasting, as compared with traditional engineering and statistical methods (10%), to address energy prediction problems; however, there are not enough studies benchmarking the performance of these methods.

2. There are few studies on EED forecasting in African countries (12 out of 67). Though the continent has made progress in the creation of Regional Power Pools (PPP) over the last two decades, it still suffers from a poor power network in most of its countries, leaving millions of people in Africa without electricity.

3. The literature is divided on temperature and rainfall as input parameters to EED forecasting models: some studies recorded an improvement in accuracy when they were introduced as inputs, while others reported none. The current study attributes this to the difference in atmospheric temperature globally and the different economic status among countries. Additional investigation will bring more clarity to the literature.

4. This study revealed that EED forecasting in the residential sector has seen little attention, even though Guo et al. [78] argue that the home is the basic unit of electricity consumption.

5. It was observed that there has been a global increase in residential electricity demand. According to the report in [49], this can be attributed to the growing rate of buying low-quality electrical equipment and appliances as living standards rise. However, a further probe into the assertion of Soares et al. [49] will bring clarity to the literature, given the discrepancy in opinions.

6. Lastly, the study revealed that there is a limited number of load forecasting studies in Ghana. We therefore recommend rigorous research in this field in the country to enhance its economic growth.

Our future study will focus on identifying the relationship between household lifestyle factors and electricity consumption in Ghana and on predicting load consumption based on the identified factors, since this is an area that has seen little or no attention in Ghana.

Current state of communication systems based on electrical power transmission lines

Antony Ndolo & İsmail Hakkı Çavdar

Journal of Electrical Systems and Information Technology

Abstract

Power line communication technology is a retrofit alternative for last-mile information delivery. Despite several challenges, such as inadequate standards and electromagnetic compatibility, it is maturing. In this review, we analyse these obstacles and the technology's current application status.

Introduction

Advancements in communication engineering and technology have brought about a revolution in the telecommunication industry. One great impact, from the last decades of the twentieth century to date, has been on information and service delivery. This is due to the high demand for information created by the huge human population. Better methods and channel models for signal transmission have been researched and developed. For instance, fibre optics has provided a waveguide for numerous services at higher speed, with further advantages such as immunity to electromagnetic interference, amongst others [1, 2]. Despite all the attractions of fibre communication, it is expensive to install and its reach is limited in certain areas, namely remote, rural and mountainous ones. This has necessitated the search for alternative information transmission methods. Power line communication (PLC) is one such alternative.

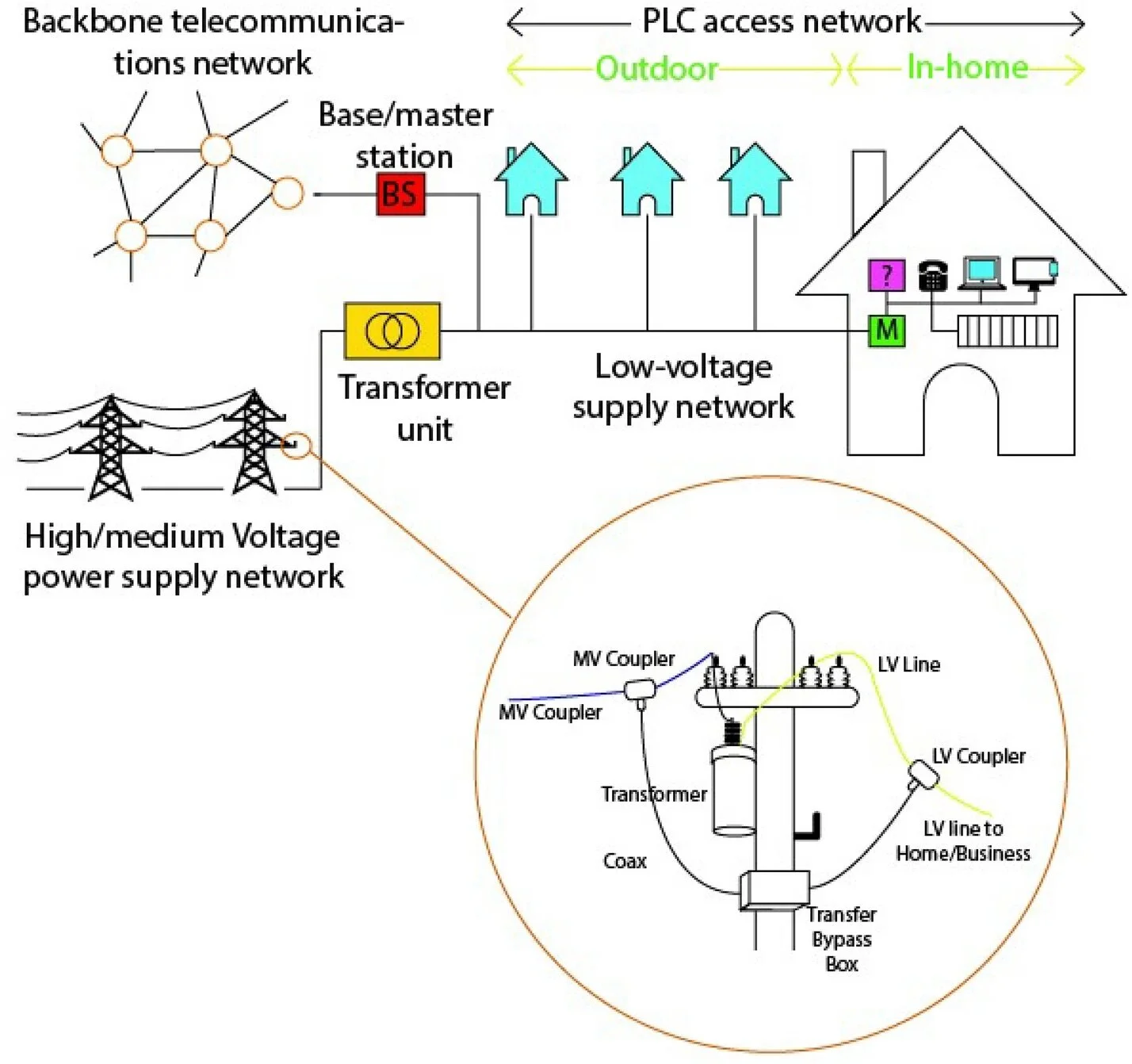

Power line communication is basically a technology that uses pre-existing, installed electrical power cables for the transmission of information [3,4,5,6]. Traditionally, such electrical lines were designed exclusively for the distribution and transmission of electricity at low frequency. This frequency varies from country to country and is mainly 50 Hz or 60 Hz. Once generated, electricity is transmitted and distributed through networks at different voltage levels. Firstly, electricity is transmitted over high-voltage lines, then distribution is done over medium-voltage lines, and lastly, it is stepped down using transformers for end-user consumption on the low-voltage lines. Figure 1 gives a summary of the PLC structure. The technology is therefore a retrofit and is cheaper than other methods. First, there is no need for new cable installations. Secondly, the electrical power network is the most developed, covers large areas and reaches many homesteads. On distribution lines, PLC is mainly used for control signals, remote data acquisition and IP telephony services [7].

Structure of power line communication network

Power line communication is divided into three categories, namely ultra-narrowband, narrowband and broadband, as summarised in Table 1. The first two are commonly grouped together and termed narrowband PLC. We will characterise these categories in detail in the next section.


Can vitamin C lower uric acid? A collection of popular medication questions!

Dingxiang Garden

1. Can vitamin C lower uric acid?

Yes.

Relevant literature shows that "vitamin C can reduce uric acid." The mechanism of action is twofold: the antioxidant effect of vitamin C can dilate the afferent glomerular arterioles, increasing renal blood flow and thus the glomerular filtration rate; and vitamin C competes with uric acid for tubular reabsorption.

2. What medications can be used to treat obesity?

Naltrexone / bupropion, lorcaserin, phentermine / topiramate, orlistat, liraglutide.

Currently, the main drugs approved by the United States Food and Drug Administration (FDA) to treat obesity are naltrexone / bupropion, lorcaserin, phentermine / topiramate, orlistat, and liraglutide.

But orlistat is the only drug approved in China with an indication for treating obesity. Orlistat is indicated for the treatment of obese and overweight (BMI ≥ 24 kg/m²) adults aged 18 years and over[1].

3. How to choose anti-osteoporosis drugs?

Choose according to the patient's situation.

Oral medications such as alendronate should be preferred in patients with low to moderate fracture risk. In elderly patients with oral intolerance, poor compliance or high fracture risk, such as those with multiple vertebral fractures or hip fractures, injections such as zoledronic acid and teriparatide may be considered. An estrogen or a selective estrogen receptor modulator such as raloxifene may be considered in patients with a high risk of vertebral fracture only, but not of hip or other non-vertebral fracture. Short-term use of calcitonin may be considered in patients with a new fracture and pain[2].

4. What is the optimal route of administration of dopamine?

Administration via central venous catheter.

Dopamine is preferably administered via a central venous catheter to eliminate the risk of drug extravasation. In the absence of a central venous catheter, a large vein should be used for intravenous injection or intravenous drip, and care should be taken to prevent drug extravasation[3].

5. What is the preferred fluid for acute pancreatitis rehydration?

Isotonic crystalloid solution.

Extracellular solutions (Ringer's lactate, etc.) may be associated with anti-inflammatory effects, but evidence based on randomised trials is insufficient to demonstrate that Ringer's lactate is superior to normal saline. Artificial colloids such as hydroxyethyl starch (HES) are not recommended due to an increased risk of organ failure[4-6]. Blood potassium levels should be corrected at the same time.

My mother-in-law said I had harmed three generations of the family: a hepatitis B mother's two births

Dingxiang Garden

Infected

The liver is a "silent" organ: liver tissue has no sensory nerve endings and cannot report its pain. In most cases, hepatitis B does not attack rapidly. The virus lingers in the body for a decade or two, and unless they are told, infected people won't even realize they have hepatitis B.

Gu Xia was diagnosed at the age of 14.

In the late summer of 1999, the school of Gu's brother conducted a large-scale screening for hepatitis B infection. After the results came out, the school called; in a solemn tone, the caller said her brother was infected with the hepatitis B virus and suggested that the whole family "get screened as well."

Two small children from the next village were diagnosed at the same time. The three families squeezed into one car to go to the county CDC, and the results came out that night: Gu Xia and the mother of one of the other children were confirmed to carry the hepatitis B virus.

"How did I get it?" This is the question that people infected with hepatitis B keep returning to after learning their results. Gu Xia is no exception.

There are two things she can trace it to.

At the age of seven, Gu fell ill with yellow skin, yellow urine, fever, weakness and an aversion to oily food, which locally was considered a sign of liver disease. In the early 1990s, few people in Gu Xia's village would go to the city to seek medical treatment. "Children, whether they had hepatitis or smallpox, were taken to barefoot doctors, given folk remedies and treated at home."

Her grandfather punted across the river and took Gu Xia to a local old man, more than sixty years old, with dark, rough skin and few words. Farming was his main occupation, but locals said his family had handed down a folk prescription for liver disease for generations.

The old man lifted his eyelids, looked at the girl's face and said, "It's liver disease, all right." He gave her "a lump of black medicinal paste" to take home and roll into pills the size of broad beans, two pills a day.

"Now that I think about it, some of the acute symptoms may have disappeared, but I didn't go to the hospital for a check-up. The hepatitis may have been there ever since."

The other risk came from shared needles.

Gu Xia, who had been in poor health since childhood, was a frequent visitor to the doctor's home. On the doctor's desk stood an aluminum lunch box with an alcohol lamp under it, and a handful of needles lay at the bottom of the box.

"Whenever I had a cold, I went for an injection. When it was done, the needle was pulled off and put back in the lunch box. Once it was heated, it counted as sterilized. One of the few needles inside was swapped in for the next child, and then another for the one after."

But for Gu Xia, the speculation about "iatrogenic infection" is not the most frightening part. What is frightening is that "you may have become the source of infection." She carries a secret, deep guilt that she "may have caused her brother and the other children to contract hepatitis B."

The moral self-examination and condemnation that the virus brings are not unique to Gu. Hepatitis B infection is seen as the infected person's own fault, just as heavy drinkers are prone to esophageal cancer and smokers to lung cancer. People speculate that hepatitis B patients must have "done something unhealthy" to "attract such punishment."

A woman born in the 1980s once mentioned that after her school told her she was infected with the hepatitis B virus, her parents' first reaction was to blame her for "eating out." This misperception still exists today.

This is despite a WHO document stating: "Hepatitis B virus is not transmitted by sharing eating or cooking utensils with an infected person, or by an infected mother's breastfeeding, hugging, kissing, coughing or sneezing."

Yet for hepatitis B, the rumors never stop.

From the early years, when laws and regulations imposed unwarranted occupational bans on hepatitis B carriers, to the public's fantasies of contamination conjured up by the very term, HBV carriers have had to hide their identity carefully. As a result, life has deprived them of many choices.

At the age of 15, Gu Xia graduated from junior high school and did not continue her studies. She wanted to work in a food factory opened by relatives, but was turned away at the medical examination. "How many paths are open to someone like me, who hasn't had much schooling? Either factory work or catering, and both were dead ends."

Gu Xia eventually entered the beauty salon industry, which "has the lowest barrier to entry." She started as a front-desk cashier, trying to avoid direct contact with customers.

Before 2018, while working away from home, she chose to live alone whenever possible, so as "not to cause trouble for others."

Birth

"Can it be cured?"

"There is no cure for this disease. Treatment is money spent for nothing; however much you spend, it can't be rooted out."

In the four years after her diagnosis, Gu Xia did not give up, and this exchange took place many times between her and her doctors.

Her family did not give up either, asking around for folk prescriptions that might offer a "complete cure."

The first prescription left a lasting mark on Gu Xia and her brother. An uncle pulled a few herbs from the ridge of a field, mashed them, soaked them in strong liquor and applied them to the siblings' wrists. "Left wrist for men, right for women; apply it morning and night, and once the pus runs out, the disease is gone."

The siblings could not get through the night: their wrists burned, blistered and oozed yellow fluid, and they were "rolling around in bed in pain."

Twenty years later, Gu Xia still has scars on the inside of her wrist from that injury. "When I reach out my hand and people ask, I say I was scalded as a child."

The scar on Gu Xia's wrist, pale white where the wound scabbed over

Even after that ordeal, Gu Xia did not stop. She drank bitter decoctions and swallowed balls made of pig bile and flour. But the test results from the hospital were never what she hoped for: "still the 'big three positives'" (HBsAg, HBeAg and anti-HBc all positive).

Unable to get the result she wanted, Gu gave up completely. She stopped going to the hospital and stopped treatment, pretending to go back to the time before the diagnosis, when she had not yet lost her "normal" status. For her, it was "a way to survive."

But there was one problem she could never get around - marriage and childbirth.

When she was 20 years old, Gu Xia and her partner of two years were about to marry. She told him the truth. After consulting his parents, her boyfriend sent her a reply:

"Our family would rather have an ugly daughter-in-law than an unhealthy one."

"I was particularly hurt by his attitude. I thought about it for a long time back then and decided that confessing had been a mistake, and that I should have kept it from him."

Three years later, Gu Xia entered her first marriage carrying her secret. Her husband's side did not mention a premarital examination, and she avoided the subject too.

In 2009, she and her husband went to Beijing together to work as "northern drifters," and she became pregnant.

The secret still could not be kept. When she was six months pregnant, her husband suffered motion sickness and vomiting on his way to work. The doctor diagnosed acute hepatitis B virus infection and asked his family members to get tested.

"I couldn't hide it any longer. In front of his family, the doctor held my results and said, 'You obviously weren't infected just recently.'"

When her husband and his family found out, their expressions changed. Her mother-in-law, who used to accompany her whenever she went out, never asked about Gu Xia's health again.

Doctors advised her to undergo "mother-to-child blocking," a term Gu was hearing for the first time.

"At that time, I went to see a department director at a hospital in Beijing. Because I had no file at that hospital and was from out of town, the doctor would not take me on or treat me, and only gave one suggestion: 'Get hepatitis B immunoglobulin injections in the seventh, eighth and ninth months of pregnancy.'" "The blocking rate is about 98 percent," the doctor told Gu.

Zhang Qingying, chief physician at the Obstetrics and Gynecology Hospital of Fudan University in Shanghai, has 30 years of clinical experience. As she recalls, the practice of "mothers with hepatitis B virus using hepatitis B immunoglobulin in the third trimester of pregnancy" was once vigorously promoted.

But "in recent years, more clinical studies have failed to show that it improves the mother-to-child blocking rate," she said. "It simply cannot play a blocking role. It does not inhibit the virus and has no protective effect on the child. After that, it was no longer used."

Everything that happened afterwards confirmed this statement. But for Gu at the time, it was a lifeline, carrying a 50% chance. "As long as the child was healthy, I would have a say in the family, and my marriage would be preserved."

She returned to Anhui and begged doctors at the county's maternal and child health care hospital to give her hepatitis B immunoglobulin injections.

A few months later, the child was born in a private hospital. The medical staff who treated Gu Xia showed no awareness that "this is a mother with chronic hepatitis B" and followed routine procedure.

Less than 12 hours after birth, Gu Xia's son received the hepatitis B vaccine and hepatitis B immunoglobulin. The former was added to the national category-one vaccines in 2005; it is free of charge and compulsory for all newborns. If the mother carries the hepatitis B virus, the child must also receive a dose of hepatitis B immunoglobulin.

There was a ten-month interval between the birth of the child and confirmation of the infection. Throughout it, Gu Xia lived in turmoil. "I was a contradiction. I wanted to find out what this disease could do, but I was scared and didn't want to know. I feel that if I had never been tested, my life would have been different, and it wouldn't be as miserable as it is now."

"Naturally I hoped he was healthy, and I didn't want him to suffer again for my sins. I was afraid to know whether he had hepatitis B, but I had to be responsible for him."

The hospital examination results shattered all her hopes: her son was "small three positives" (small Sanyang). (Note: The success rate of mother-to-child blocking is mainly related to the timeliness of the newborn's vaccination and the maternal viral load. The blocking rate of vaccine plus immunoglobulin is about 96%.)

From the time the child was born, her mother-in-law forbade Gu Xia from being close to her son. She did not allow breastfeeding and kept dishes and chopsticks separate. Whenever Gu Xia held her son and played with him for a while, her mother-in-law came forward to take the child away. "All her actions were saying, 'You're sick, stay away from the child, stay away from us.'"

After her son's test results came out, "behavior" became "language."

"She used to shout, 'You have destroyed three generations of our family.'"

Her husband came to talk to Gu Xia: "You are sick, and the child is sick too. Treating the child will cost me a large amount of money, and one day you may need such a large sum as well. We are husband and wife, and from a moral point of view I should pay for your treatment, but I can't afford it."

"I understood then: if we were no longer married, he would no longer have to take care of me."

After that conversation, Gu Xia's marriage ended.

"I explained that the doctor had said it was an extinct volcano and that all it needed was medicine to keep it down. 'How do you know I will definitely need a large sum of money for treatment?' But he didn't believe me, and thought I was quibbling."

Anxiety about the risk of mother-to-child transmission surrounds almost every "expectant mother with hepatitis B." A mother in Sichuan will take her child for a check-up next week. She hasn't slept well for a long time. "If the check-up comes out bad, I won't be able to face my husband and mother-in-law."

Such feelings of guilt and anxiety permeate Zhang Qingying's clinic. "The baby lives in the mother's womb for ten months," and so people are harsher on women. Men who carry the hepatitis B virus face far less trouble over childbearing than women do.

Blocking

Eight years ago, Zhang Qingying met a woman with cirrhosis who was 26 weeks pregnant. Her hepatitis B virus had come from her own mother. "By our capabilities at the time, this pregnant woman was risking her life by continuing her pregnancy. But this mother was very insistent. She said, 'I want this baby even if I die.'"

The hospital formed a team of doctors from the hepatology department and from obstetrics and gynecology. "Even with cirrhosis, the patient had been able to conceive naturally, which indicated that her liver was not that badly damaged and that there was still a glimmer of hope."

Zhang Qingying describes the course of treatment very simply: "We took it one step at a time." "We judged by her viral load whether antiviral drugs were needed and how to use them. After the child is born, the hepatitis B vaccine and hepatitis B immunoglobulin must be given; this is the most important." The mother gave birth at 38 weeks and survived, and transmission of the hepatitis B virus to her child was completely blocked.

The plan, which is now very mature, was a big challenge for Zhang and her colleagues eight years ago. "At that time, we did not know how effective it would be or what it would achieve. We groped our way forward slowly, and now it has become a guideline and a consensus. Just as with COVID-19, treatment is a constant search for the approach with the least risk and the greatest effect."

It was under the guidance of such a plan that Gu Xia gave birth to a healthy daughter. At this point, it had been eight years since she gave birth to her first child.

Before entering her second marriage, she was completely honest about her situation. Her husband accepted her condition, consulted a doctor, was vaccinated against hepatitis B and has not been infected.

On the issue of having children, Gu Xia made one request: "Beijing's medical conditions are good. I want to give birth in Beijing."

She had prenatal checkups every two weeks, and her mood rose and fell with her hepatitis B viral load. "The virus count was once very high, always at the eighth power." The doctor prescribed antiviral drugs; it dropped to the sixth power, then the next month to the third power, and has remained there ever since.

To reduce intrauterine infection, Gu was asked to replace amniocentesis with a noninvasive prenatal test, which could obtain a "relatively accurate answer" from peripheral venous blood.

After delivery, the midwife immediately took the child away from the environment contaminated by maternal blood, thoroughly removed the blood, mucus and amniotic fluid from the child's body, and wiped the surface of the umbilical cord before cutting it.

None of these measures had been in place for the birth of her first child. From the pregnancy until her daughter was confirmed healthy, Gu Xia kept thinking of that first birth.

"It would have been nice to have done the same back then." That thought popped into her head a thousand times.

After her son was diagnosed with "small three positives," doctors in Beijing advised the parents to cooperate with the child's treatment. "If they are not well treated in childhood, they will develop into chronic virus carriers in adulthood."

But the treatment was stopped after a few years by her former husband, who took the child back to his hometown in Anhui. "The expense was the main reason, nearly one hundred thousand dollars a year. The child was also in pain, and the interferon made his mood bad," to the point of banging his head against a wall.

More important was how the people around them looked at it. "Neighbors know you've gone to see the doctor, and parents will tell their children not to play with him." Her ex-husband always felt that "this stigma is not brought by the disease itself, but by the treatment."

No one spoke to his son about what had happened to him, and the boy, who had become increasingly silent, seemed accustomed to being taken in for regular checkups.

Gu Xia can only stay silent. She cannot even imagine sitting down and talking with her son. "'Son, it was your mother who gave you the virus.' Is that it? It's hard just to open my mouth."

"Let him see it first. Me too."

The "silent" tens of millions

Li Bei, 27, shows one possible future for Gu Xia's son.

It was only after Li Bei's birth that her mother was told that both mother and daughter had contracted hepatitis B. As a minor, she shuttled regularly with her mother between the city's infectious disease hospital and home, but only her mother stayed in the doctor's office to hear the instructions.

It wasn't until the college entrance examination that she learned she was a hepatitis B virus carrier.

"Do you blame your mother?"

"No. She's also a victim. She didn't want this either."

But Li Bei still feels trapped. She wants to fall in love and wants a marriage, but she is afraid of seeing in the other person's eyes that he minds.

Her mother advised her to hide it. "I can't. This lie must be followed by a series of lies."

It's something medicine can't solve.

In Zhang Qingying's consulting room, some patients want the doctor to erase the history of hepatitis B from a sick-leave note; some husbands want to keep it secret and not tell their wives that they are infected with the hepatitis B virus; and some women agree a story in advance with their husbands and the doctor to hide it from their parents-in-law.

These go far beyond the job of doctors "saving lives."

With husbands who want help hiding their hepatitis B, she carefully balances privacy and health. "We tell the wife to get vaccinated in advance so that her body produces antibodies, which removes a lot of unnecessary risk. But we will say no more than that, so as not to destroy the family."

Zhang Qingying also tells pregnant women who want to keep it from their in-laws that they can authorize one person, such as their husband, to be told about their condition. "Others who are not within the scope of authorization, we have no right to inform, and they have no right to know."

Doctors understand the concerns of people infected with hepatitis B. The easy and effective HBV surface antigen test has singled them out from the crowd, but no one has been able to eliminate the virus completely.

From day one of diagnosis, fear is born. Public misconceptions about the infectiousness and transmission of hepatitis B push people living with the virus to the margins, and they may be forced to abandon their earlier plans. From then on, silence becomes one of their protective colors in the society they live in.

Some of them take medicine in secret, hide their trips to the infectious disease hospital, guiltily write "good" on the company's health questionnaire, and even disguise their voices to conceal their identity. Apart from the medicine box and the test reports, everything related to "hepatitis B" has been stripped from their lives, consciously or unconsciously.

Gu Xia's brother is 33 this year. Because of the hepatitis B virus, his relationships came to nothing and he has not married. The once cheerful and lively boy became silent.

"In our group, sometimes patients pair up with other patients. I think my brother really could look for someone like that."

But she dares not bring it up. "'Hepatitis B' is a taboo between us siblings. At the first mention, my brother flares up and shouts, 'What do you know?'"

Only once did her brother propose to her that he take his nephew from her ex-husband's side. "The child is too pitiful. I'll raise him well."

When transaminases are elevated, can statins be continued?

Sun Guolong from Ding Xiang Garden

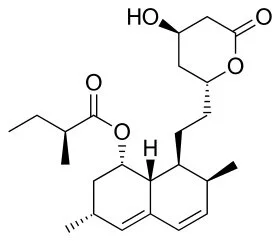

Statins, namely hydroxymethylglutaryl-CoA (HMG-CoA) reductase inhibitors, regulate lipids by inhibiting cholesterol synthesis in the body. They also stabilize atherosclerotic plaques, have anti-inflammatory effects, and improve the stability of vascular endothelial cells, and they are widely used clinically for secondary prevention of coronary heart disease, stroke and other diseases.

In clinical practice, however, many patients on statins develop elevated transaminases during treatment, and some even develop drug-induced hepatitis. Can statins be continued once liver function is abnormal? How should the indications and strategies for adjusting medication be defined?

01. What are the characteristics of statin-induced transaminase elevation?

Available data show that all statins may cause elevated transaminases. In approximately 1-2% of all patients receiving statins, liver enzyme levels rise to more than three times the upper limit of normal (ULN).

The increase of transaminase induced by statins occurred in a dose-dependent manner within 3 months after drug initiation, and the liver enzyme level decreased after drug cessation.

At present, most scholars believe that a transient rise in transaminases beyond the normal range is the result of the organ adapting to statins and to cholesterol reduction, not a sign of liver damage.

02. How should medication be adjusted when transaminases are elevated?

1. For asymptomatic patients with isolated transaminase elevation (transaminases < 3 × ULN), there is no need to adjust the dosage or discontinue treatment;

2. If AST and/or ALT ≥ 3 × ULN during treatment, the drug should be stopped or the dose reduced, and liver function should be rechecked weekly until it returns to normal;

3. For patients with elevated transaminases accompanied by hepatomegaly, jaundice, elevated direct bilirubin or prolonged coagulation time, withdrawal should be considered;

4. Statins are safe in patients with mildly impaired liver function due to nonalcoholic fatty liver disease (NAFLD), hepatitis B (HBV) or hepatitis C (HCV), and in patients with compensated cirrhosis. Liver function monitoring should be strengthened in patients with HBV and compensated cirrhosis;

5. Statins are contraindicated in patients with active liver disease, decompensated cirrhosis and acute liver failure;

6. Rosuvastatin is hydrophilic, and 90% of it is excreted through the kidney in its original form. It is suitable for patients with liver dysfunction;

7. Atorvastatin, simvastatin and others are mainly metabolized by the liver and cleared via bile, and are suitable for patients with renal dysfunction.
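The thresholds in items 1, 2, 3 and 5 above can be read as a small decision rule. The following is a minimal sketch of that reading, not clinical software: the names `LiverStatus` and `statin_action` are hypothetical, and the order in which the rules are checked (contraindications first, then warning signs, then the 3 × ULN threshold) is an assumption, since the list does not state a precedence.

```python
from dataclasses import dataclass


@dataclass
class LiverStatus:
    """Hypothetical container for the findings named in items 1-3 and 5."""
    alt_x_uln: float  # ALT as a multiple of the upper limit of normal
    ast_x_uln: float  # AST as a multiple of the upper limit of normal
    # Hepatomegaly, jaundice, elevated direct bilirubin or prolonged coagulation time
    warning_signs: bool = False
    # Active liver disease, decompensated cirrhosis or acute liver failure
    contraindicated_disease: bool = False


def statin_action(status: LiverStatus) -> str:
    """Return the action suggested by the list above (assumed precedence)."""
    if status.contraindicated_disease:
        return "contraindicated"  # item 5
    if status.warning_signs:
        return "consider withdrawal"  # item 3
    if max(status.alt_x_uln, status.ast_x_uln) >= 3:
        # item 2: AST and/or ALT at or above 3 x ULN
        return "stop or reduce dose; recheck liver function weekly"
    return "continue unchanged"  # item 1


print(statin_action(LiverStatus(alt_x_uln=2.0, ast_x_uln=1.5)))
print(statin_action(LiverStatus(alt_x_uln=3.2, ast_x_uln=1.0)))
```

Items 4, 6 and 7 concern which statin to choose for a given comorbidity and are deliberately left out of the sketch.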

03. Alternative drugs

1. Ezetimibe

As a cholesterol absorption inhibitor, ezetimibe is the second-line drug of choice when statins are not tolerated or when treatment with statins alone fails to reach the target.

In clinical controlled studies with ezetimibe alone, the incidence of elevated transaminase (ALT / AST ≥ 3 ULN) was similar to that of placebo.

For patients with mild hepatic insufficiency (Child-Pugh score 5-6), there is no need to adjust the dosage. Ezetimibe is not recommended for patients with moderate to severe liver dysfunction.

2. Bile acid sequestrants

As basic anion exchange resins, they bind bile acids in the small intestine to prevent their reabsorption and promote the conversion of cholesterol into bile acids in the liver, thereby increasing hepatic LDL receptor activity and removing LDL-C from plasma.

Common adverse reactions include gastrointestinal discomfort, constipation and impaired absorption of certain drugs. Absolute contraindications for these drugs are complete biliary atresia, dysbetalipoproteinemia, and serum TG > 4.5 mmol/L (400 mg/dL).

3. PCSK9 inhibitors

PCSK9 is a secretory serine protease synthesized by the liver. It binds to LDL receptors and promotes their degradation, thereby reducing the removal of LDL-C from serum by LDL receptors. Inhibiting PCSK9 prevents LDL receptor degradation and promotes LDL-C clearance.

Studies have shown that PCSK9 inhibitors, either alone or in combination with statins, significantly reduce serum LDL-C levels and improve other lipid indicators.

Preliminary clinical findings suggest that the drug reduces LDL-C by 40 to 70 percent and reduces cardiovascular events. No serious or life-threatening adverse reactions have been reported so far.

COVID-19: the turning point for gender equality

Published Online July 16, 2021 https://doi.org/10.1016/S0140-6736(21)01651-2

The historian Walter Scheidel has argued that reductions in inequality have often emerged after war, revolution, state collapse, and plague.1 On July 12, 2021, there were more than 4 million deaths from COVID-19 globally.2 The disproportionate and unequal impact of COVID-19 on populations has brought renewed attention to deep inequalities. Will the impacts of COVID-19 galvanise efforts to reduce inequality?

One of the greatest inequalities globally is the inequity in the access to safe, effective health care without financial burden—universal health coverage (UHC). Historically, universal health reform has often been borne out of crisis. The Overseas Development Institute reported 71% of countries made progress towards UHC after episodes of “state fragility”,3 and there are plenty of examples across the continents. In 1938, after the Great Depression, New Zealand's Social Security Act began its commitment to UHC. The National Health Service in the UK was founded in 1948 in the wake of World War 2. Similarly, France and Japan enacted universal health reform after the conflict. In the aftermath of Rwanda's genocide of 1994, the country's new leadership focused on health for all and expanded UHC.4 Sri Lanka's publicly funded UHC system emerged from a devastating malaria epidemic,5 and after the 2003 SARS pandemic, China launched health-care reform to achieve universal coverage of basic health care by the end of 2020.6 In Thailand, decades of planning were realised with the launch of the Universal Coverage Scheme in 2002 after the Asian financial crisis.7 It is therefore possible that, like other crises before it, the COVID-19 pandemic could catalyse UHC reforms, should global leaders choose to harness the opportunity.8

Indeed, some countries, such as Finland and Cyprus, are implementing ambitious reforms to extend health coverage during the pandemic. In Cyprus, the second phase of their publicly financed General Health Scheme (GHS) was launched in June, 2020. These reforms are popular, attaining an 80% approval rating in a national survey.9

US President Joe Biden has a clear opportunity to expand health-care access to more people. The USA and Ireland are the only two countries in the Organisation for Economic Co-operation and Development without a universal health system. While in Ireland attempts are being made to accelerate UHC through national Sláintecare reforms,10 in the USA Biden's election stance on health care was for slow, incremental steps towards UHC.11 But now, in 2021, there is growing momentum for UHC reforms at a state level, especially in New York. Furthermore, in 2020 63% of Americans polled agreed that it is the federal government's responsibility to make sure all people have health-care coverage.12 If Biden does not harness this momentum, he could miss this unprecedented opportunity to bring UHC to the USA.

UHC is not only an option for high-income countries like the USA. Political leadership, and not a country's wealth, is a key determining factor in progress to UHC.3 Consequently, the COVID-19 crisis might provide a political window of opportunity for leaders in low-income and middle-income countries to launch UHC reforms.

South Africa is a country where some universal health reforms already came from crisis. When the African National Congress came to power in 1994, it inherited an inequitable “two-tiered” health system.13 One of the first major social policies of the then President Nelson Mandela was to launch universal free health care for pregnant women and children younger than 6 years. Yet, despite this advance, in the first decade of post-apartheid South Africa overall inequalities grew.14 After the 2019 election, the new South African Government reaffirmed its commitments to ensuring that quality health care is available to all citizens, with National Health Insurance at the centre of policy development. Although this policy enjoys considerable public support, the financing reforms needed for wealthy citizens to subsidise the rest of the population are yet to be implemented. However, there are indications that President Cyril Ramaphosa could use the COVID-19 crisis to drive through these reforms.15

COVID-19 impact on bladder cancer-orientations for diagnosing, decision making, and treatment

Thiago C Travassos, Joao Marcos Ibrahim De Oliveira, [...], and Leonardo O Reis

Introduction

The world is going through an unprecedented time in history with the emergence of COVID-19. In the past, epidemics were not as unusual as they are today, but humankind has never faced a disease as global and widespread as the one caused by SARS-CoV-2.

Like many oncology physicians, Urologists are fighting on two fronts: against the pandemic itself and against cancer. Among all genitourinary cancers, bladder cancer is probably the most challenging regarding timely decision making. Patients are usually in their late 60s and early 70s, are more vulnerable to COVID-19, have multiple comorbidities, and some will need chemotherapy.

Regarding fatality rates, bladder cancer exceeds COVID-19 by far; however, this is not the only aspect Urologists must consider. A great deal of panic has spread in recent months, and many patients are worried about undergoing surgery or even about seeking help for health issues. As a consequence, a large percentage of them will suffer not from the virus itself but from its consequences for healthcare systems and for the psychology and dynamics of individuals and society.

The questions are: who should be investigated and submitted to the environment of hospitals or clinics, how to investigate in the most efficient way, which patients can wait, which will need to be treated, what is the best way to treat each stage of the disease and how to proceed with these patients’ follow-up.

History has proven that pandemics have an end; but in the meantime, Urologists will have to decide which patients to expose to the health system, which ones will need more urgent intervention, and above all, to think how decisions will reflect on patients and the healthcare system in the short and long-term, with implications for years to come.

Patients with low-grade non-invasive bladder cancer have a lower potential for disease progression than patients with muscle-invasive disease. Postponing cystectomy, or completing neoadjuvant chemotherapy, beyond 12 weeks increases the risk of disease progression and upstaging [1].

Although follow-up cystoscopies can be performed on an outpatient basis, the risk of COVID-19 infection should not be disregarded, and postponement of this procedure should be used to prioritize patients with a higher risk of disease progression. Relative to the previous standard follow-up, examinations of patients with non-invasive bladder cancer may be postponed, while patients with muscle-invasive disease must have a more rigorous follow-up, considering the risk-benefit of early diagnosis of relapse and thus of treatment [2].

Based on the current literature on the optimal approach to bladder cancer patients, we comprehensively synthesize the major societies' guidelines on the issue so far, adding a critical view of the topic. This article aims to guide Urologists in decision making against bladder cancer in the COVID-19 era.

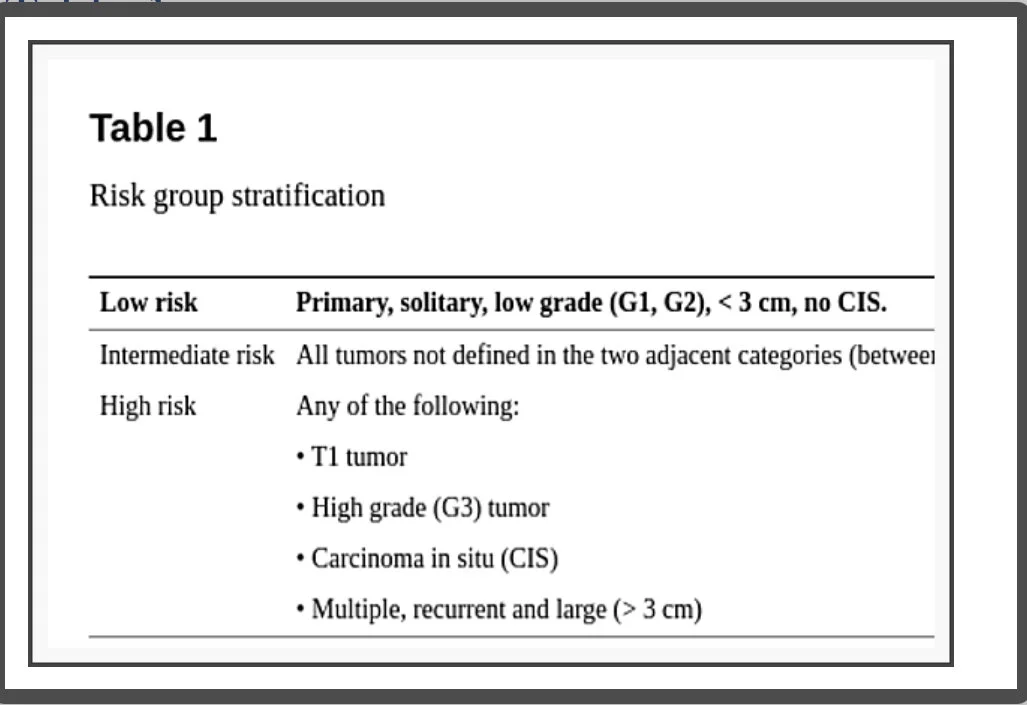

The patient approach based on data analysis and risk stratification (Table 1)

Risk group stratification

One of the worst days of patients’ lives is at cancer diagnosis and it can significantly worsen if the appropriate care access is limited. That’s the dilemma Urologists have in their hands.

The global burden of bladder cancer is high: it represented 3.0% of all new cancer diagnoses and 2.1% of all cancer deaths in 2018 [3], and it is the 10th most frequently diagnosed cancer worldwide. In Europe, incidence and mortality rates are higher in the South; in 2018 the age-standardized incidence and mortality rate were 15.2 per 100,000 inhabitants and the number of deaths was 64,966 [4].

Regarding the United States (US), the annual death rate from 2013 to 2017 (adjusted to the 2000 US standard population) was 4.4 per 100,000. For 2020, the American Cancer Society estimates 81,400 cases of bladder cancer, which represents 4.5% of all new cancer cases, leading to 17,980 deaths [5].

COVID-19 data changes every day. The April-26th 2020 World Health Organization (WHO) Situation Report showed a total of 2,804,796 confirmed cases, 193,722 deaths. Spain registered 219,764 cases and 22,524 deaths and the United States 899,281 cases, with 46,204 deaths [6].

In absolute numbers of deaths, COVID-19 exceeds bladder cancer by far, but analysis of the numbers can be confusing and uncertain because of differences in the models used to measure the data. Even when the correct epidemiological terms are used, the number of tests and the eligibility criteria for testing can, alone, make fatality rates vary from 0.35% in Israel to 11% in Italy at the end of March 2020. The WHO Situation Report shows a 6.9% overall case fatality rate [7].

But how to compare all of these numbers? Once diagnosed with bladder cancer the overall case fatality rate all over the world (2012) and in the United Kingdom (2015-2017) were respectively 38% and 52%, and it is estimated to be 22% in the US for 2020. The situation can be analyzed from a different perspective. COVID-19 has, indeed, killed many people; but mostly because of its great potential of spreading (infections and cases) since its fatality rate remains low. On the other hand, when someone is diagnosed with bladder cancer the chance of dying of it can be as high as 52%, so the consequences of delaying definitive treatment might impact clinical outcomes and be felt far beyond the COVID-19 outbreak, for years to come [8].
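The comparison above rests on simple ratios of deaths to diagnosed cases. As a rough check, the sketch below recomputes those ratios from the counts quoted in this section. The function name `case_fatality_rate` is illustrative, and pairing the American Cancer Society's estimated 2020 deaths with its estimated 2020 cases is an assumption about how the 22% figure was obtained.

```python
def case_fatality_rate(deaths: int, cases: int) -> float:
    """Deaths divided by diagnosed cases, as a percentage rounded to 0.1."""
    if cases <= 0:
        raise ValueError("cases must be positive")
    return round(100.0 * deaths / cases, 1)


# Counts quoted in the text (WHO Situation Report of April 26th, 2020, and
# American Cancer Society estimates for 2020).
figures = {
    "COVID-19, worldwide": (193_722, 2_804_796),
    "COVID-19, Spain": (22_524, 219_764),
    "COVID-19, United States": (46_204, 899_281),
    "Bladder cancer, United States (2020 estimate)": (17_980, 81_400),
}

for label, (deaths, cases) in figures.items():
    print(f"{label}: {case_fatality_rate(deaths, cases)}%")
```

The worldwide COVID-19 ratio reproduces the 6.9% reported above, and the US bladder cancer ratio comes out at about 22%. Both depend heavily on how many cases are actually detected, which is the caveat the text raises about testing criteria.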

Even so, some considerations are important for shared decision making. A pandemic outbreak puts the healthcare system under extreme pressure. Many hospitals are interrupting elective surgeries to preserve health teams, ventilators, standard rooms, and Intensive Care Unit (ICU) capacity.

The American College of Surgeons advises that decisions about proceeding with elective surgeries should not be made in isolation, but should use frequently shared information systems and take into account local resource constraints, especially protective gear for providers and patients. “This will allow providers to understand the potential impact each decision may have on limiting the hospital’s capacity to respond to the pandemic. For elective cases with a high likelihood of postoperative ICU or respirator utilization, it will be more imperative that the risk of delay to the individual patient is balanced against the imminent availability of these resources for patients with COVID-19” [9].

Patients have to be aware that surgery, even during the incubation period of COVID-19, can be a risk factor for postoperative complications, ICU need, and mortality. It is also important to look closely at age-specific fatality rates. In China, approximately 80% of deaths occurred in people over 60. In the United States, 31% of cases, 45% of hospitalizations, 53% of ICU admissions, and 80% of deaths occurred among people aged 65 or more. Using March 2020 as a reference, while the overall case fatality rate was 3.5%, case fatality rates ranged from 2.7-4.9% at ages 65-74 to 4.3-10.5% at ages 75-84, showing that age plays an important role in outcomes, especially in the age range in which bladder cancer is more prevalent. Patients have to know that the real scenario, an ongoing pandemic, can be vastly underestimated and that data and protocols can change day by day [7,8].

Patient selection to undergo cystoscopy

Two different situations must be distinguished: (1) Patients not yet diagnosed with bladder cancer and (2) Follow-up patients [10].

Macroscopic hematuria is in itself a very challenging symptom for Urologists, and it is frightening for patients. Both facts, combined with COVID-19, make its management even more challenging. Although no internationally accepted uniform algorithm exists, visible hematuria always requires investigation, as its presence carries a risk of malignancy of about 20.4%, while in microscopic hematuria this risk is around 2.7%. Cystoscopy remains the diagnostic test of choice for bladder cancer, and in cases of unequivocal lesions on ultrasound or computed tomography urography the indication is to proceed immediately to transurethral resection of the bladder (TURB) [11,12].

The fact is that the ideal, painless, outpatient, flexible cystoscopies are not the reality in most developing countries where the procedures (rigid cystoscopy) occur in the operation room during hospitalization, exposing the patient, even if for a brief period, to the hospital environment.

Despite that, in the presence of macroscopic hematuria the Cleveland Clinic and the American Urological Association (AUA) recommend performing a full evaluation without delay, as scheduled. In the presence of microscopic hematuria with risk factors (smoking history, occupational/chemical exposure, irritative voiding symptoms), diagnostic cystoscopy can be delayed by up to 3 months unless the patient is symptomatic. If there are no risk factors, evaluation can be delayed as long as necessary [13].

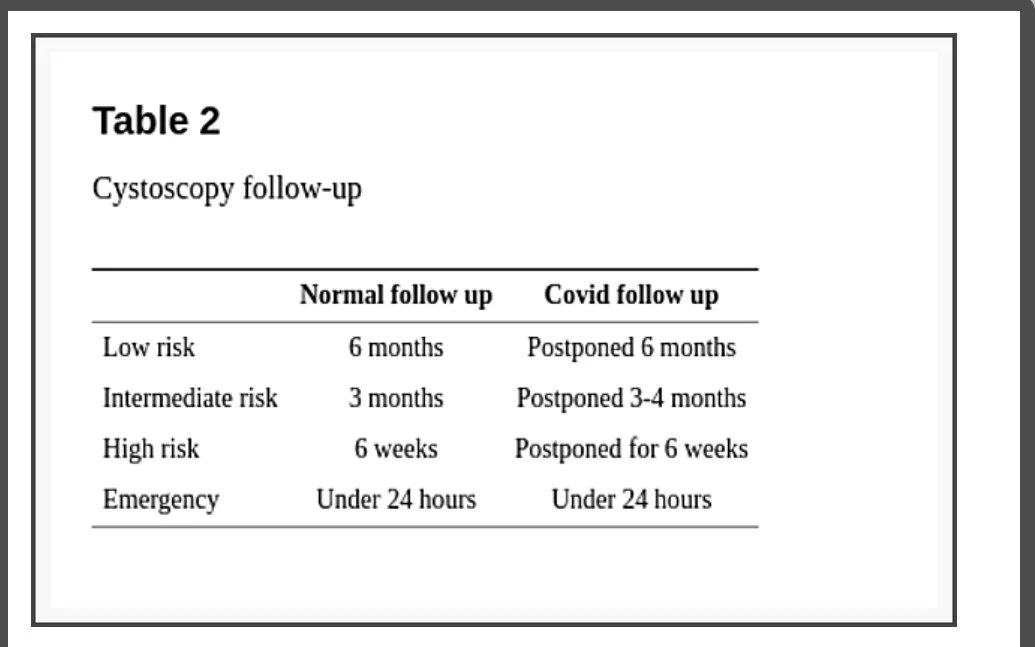

Regarding follow-up, the EAU divides situations into four levels of priority according to clinical harm (progression, metastasis, loss of renal function) risk. (1) Low: very unlikely if postponed 6 months; (2) Intermediate: possible if postponed 3-4 months, but unlikely; (3) High: clinical harm and cancer-related death very likely if postponed for more than 6 weeks; (4) Emergency: a life-threatening situation or opioid-dependent pain, treated at emergency departments despite current pandemic [14].

The recommendation for low-priority patients, those with low- or intermediate-risk non-muscle-invasive bladder cancer (NMIBC) without hematuria, is to defer cystoscopy by 6 months. Intermediate-priority patients, with a history of high-risk NMIBC without hematuria, might be followed up before the end of 3 months. High-priority patients, with NMIBC and intermittent hematuria, must undergo follow-up cystoscopy within 6 weeks. In case of emergencies (visible hematuria with clots, urinary retention), cystoscopy or TURB must be considered within 24 hours (Table 2). The EAU guideline is the only one to specify the level of confidence of its recommendations (all those cited above, level 3) [14].
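The EAU follow-up rules described above amount to a lookup from NMIBC risk group and hematuria status to a priority level and a cystoscopy time frame. The sketch below encodes only the combinations stated in the text. The function name `eau_cystoscopy_priority` and the category strings are hypothetical labels chosen for illustration, and combinations the text does not describe raise an error rather than being guessed.

```python
# Time frames as stated in the text for each EAU priority level.
EAU_TIME_FRAME = {
    "low": "defer cystoscopy by 6 months",
    "intermediate": "follow up before the end of 3 months",
    "high": "follow-up cystoscopy within 6 weeks",
    "emergency": "consider cystoscopy or TURB within 24 hours",
}


def eau_cystoscopy_priority(nmibc_risk: str, hematuria: str) -> str:
    """Map a follow-up patient to an EAU priority level.

    nmibc_risk: "low", "intermediate" or "high" (hypothetical labels)
    hematuria:  "none", "intermittent" or "clots_or_retention"
                (the last stands for visible hematuria with clots or
                urinary retention)
    """
    if hematuria == "clots_or_retention":
        return "emergency"
    if hematuria == "intermittent":
        return "high"
    if hematuria == "none":
        if nmibc_risk in ("low", "intermediate"):
            return "low"
        if nmibc_risk == "high":
            return "intermediate"
    raise ValueError(f"combination not covered: {nmibc_risk!r}, {hematuria!r}")


priority = eau_cystoscopy_priority("high", "none")
print(priority, "->", EAU_TIME_FRAME[priority])
```

Keeping the time frames in a separate table from the classification makes it easier to update one without touching the other if the guideline changes.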

Cystoscopy follow-up

AUA guideline recommends considering surveillance cystoscopy without delay for assessment of response to treatment or surveillance of high-risk NMIBC within 6 months of initial diagnosis. For high-risk NMIBC beyond 6 months of initial diagnosis, the recommendation is to consider delaying evaluation for up to 3 months. Assessment of response to treatment or surveillance of low/intermediate-risk NMIBC regardless of when the diagnosis was made may be delayed for 3 to 6 months [13].

Considerations regarding computed tomography and hematuria

All over the world, SARS-CoV-2 is putting healthcare systems under a lot of pressure, and computed tomography (CT) is being used for diagnosis, management, and follow-up of COVID-19. Although CT, using specific urological protocols, is the gold-standard method for the investigation of most urological pathologies, this new scenario is forcing Urologists to review and adapt.

The DETECT I (Detecting Bladder Cancer Using the UroMark Test), a prospective, observational, multicentric study showed that patients with microscopic hematuria had 2.7%, 0.4%, and 0% incidence of bladder cancer, renal and upper tract urothelial cancer, respectively, and that the approach with cystoscopy and renal and bladder ultrasound (RBUS), instead of CT could be used, in cases of microscopic hematuria. On the other hand, RBUS accuracy alone to detect bladder cancer was poor with 63.6% sensitivity, reinforcing that for bladder cancer diagnosis, cystoscopy remains the gold standard and CT urography continues to be the upper tract investigation method of choice [15].

Regarding follow-up, the EAU guideline is the only one that provides some guidance: it recommends deferring upper tract imaging by 6 months in patients with a history of high-risk NMIBC (low priority) [14].

Therapeutical and surgical indications for patients with bladder cancer in the COVID era

The AUA is following the guidance of the American College of Surgeons, which advised surgeons to consult the Elective Surgery Acuity Scale (ESAS) from St Louis University for decision making during the COVID-19 outbreak. This scale is graded from tier 1 to tier 3, with subdivisions (a) and (b). Most cancers are classified as 3a (high-acuity surgery/healthy patient), which must not be postponed. There is no specific mention of bladder cancer patients [16]. The Cleveland Clinic Department of Urology is more specific regarding procedures, as it stratifies patients from 0 (emergency) to 4 (nonessential) and specifies surgeries, although its tables and text are very poor at explaining acronyms. In this guideline, cystectomy (high-risk CA) and TURB (high-risk) are stratified as 1 on the 0-4 scale, while low-risk TURB is stratified as 4 [13].

The EAU guideline uses the same method as for cystoscopy, stratifying patients into priority categories (low, intermediate, high, and emergency), and is more specific and complete [14]. We summarize all recommendations below, dividing the disease according to its classic risk stratification and relying mainly on the EAU guideline because of its consistency. Discussing the TNM staging system is beyond the scope of this article, and it is used here as in the EAU guideline. It is also important to note that most of the statements were extracted directly from the guideline but rearranged in a more didactic way.

Non-muscle-invasive bladder cancer

According to the EAU guideline [14], TURB can be delayed by 6 months in patients with small papillary recurrence(s) (less than 1 cm) and a history of Ta/1 low-grade tumors. If feasible, these lesions may simply be followed or fulgurated during office cystoscopy. The second TURB in patients with a visibly complete initial TURB of a T1 lesion with muscle in the specimen can also be deferred by 6 months. In these cases, TURB can also be postponed until after Bacillus Calmette-Guérin (BCG) intravesical instillations.

Patients with any primary tumor or recurrent papillary tumor greater than 1 cm, and without hematuria or a history of high-risk NMIBC, must be treated before the end of 3 months. In cases with bladder lesions and intermittent macroscopic hematuria or a history of high-risk NMIBC, TURB must be done within 6 weeks. All patients with confirmed or suspected bladder cancer presenting with macroscopic hematuria with clot retention and/or requiring bladder catheterization ought to be treated within 24 hours. Any patient with the highest-risk NMIBC must be considered for immediate radical cystectomy.

Muscle invasive bladder cancer

According to the EAU guideline [14], when muscle-invasive bladder cancer (MIBC) is diagnosed, staging imaging by CT of the thorax, abdomen, and pelvis should not be delayed. On the other hand, when images are suspicious for invasive tumors, TURB must be performed. In both cases, CT or TURB must be done within 6 weeks.

The proven benefit of neoadjuvant chemotherapy (NAC) in T2 tumors is limited and has to be weighed against the risks, especially in patients with a short life expectancy and those with pulmonary or cardiac comorbidity. The same is valid for focal T3 N0M0 tumors. For cisplatin-eligible patients with high-burden T3/T4 N0M0 disease, the risk of NAC should be individualized while they are on the waiting list, but treatment should be offered within 6 weeks. Inclusion in chemotherapy trials must be delayed, except for cisplatin-eligible patients.

Radical cystectomy (RC) can be offered to patients with T2-T4a, N0M0 tumors and must be performed before the end of 3 months. Once RC is scheduled, the urinary diversion or organ-preserving techniques should be carried out as they would be planned outside this crisis period. Multimodality bladder-sparing therapy can be considered for selected T2N0M0 patients [17]. In cases where palliative cystectomy is considered, such as intractable hematuria with anemia, alternatives such as radiotherapy (RT), with or without chemotherapy, must be discussed. In the presence of anemia, it is important to start treatment within 24 hours; other palliative cases may wait no more than 6 weeks.

Adjuvant cisplatin-based chemotherapy can be offered to those patients with pT3/4 and/or pN+ disease if no NAC has been given and must be started in less than 6 weeks.

Metastatic bladder cancer

Oncologists play a central role in advising on the feasibility of non-surgical treatments such as radiotherapy or chemotherapy in the management of malignancy, especially in the uncertain times the world is facing [17]. In the words of Dr. Thomas Powles, Professor of Genitourinary Oncology and Director of the Barts Cancer Centre in London, “(…) if you’re going to give chemotherapy today (…) Don’t think about what it looks like today. Think what might it look like in two weeks before you push the patient into that swimming pool, not knowing how long they’re going to be under the water for” [18].

The key point is to assess risk and benefit individually in each patient. In asymptomatic patients with low disease burden, first-line therapy can, in selected cases, be postponed for 8-12 weeks under clinical surveillance. The EAU guideline recommends cisplatin-containing combination chemotherapy with GC (gemcitabine plus cisplatin), MVAC (methotrexate, vinblastine, adriamycin plus cisplatin), preferably with G-CSF (granulocyte colony-stimulating factor), HD-MVAC (high-dose MVAC) with G-CSF, or PCG (paclitaxel, cisplatin, gemcitabine). Within this period (less than 3 months), the checkpoint inhibitors pembrolizumab or atezolizumab should be offered depending on PD-L1 (programmed death-ligand 1) status [14].

In symptomatic metastatic patients, the benefit of treatment is likely higher than the risk, and these patients should initiate treatment within 6 weeks. Supportive measures such as the use of G-CSF should be considered. The recommendations are the same as for asymptomatic patients being treated. As second-line therapy, the checkpoint inhibitor pembrolizumab can be offered to patients progressing during or after platinum-based combination chemotherapy for metastatic disease. Alternatively, offering treatment within a clinical trial setting can be an option [14].

Supportive care

The EAU guideline recommends that acute renal failure in locally advanced bladder cancer be treated with nephrostomy in an ambulatory setting [14]. This is not a reality in most developing countries, where nephrostomies are performed in operating rooms with improvised materials. Embolization or hemostatic RT ought to be considered for bleeding with hemodynamic repercussions.

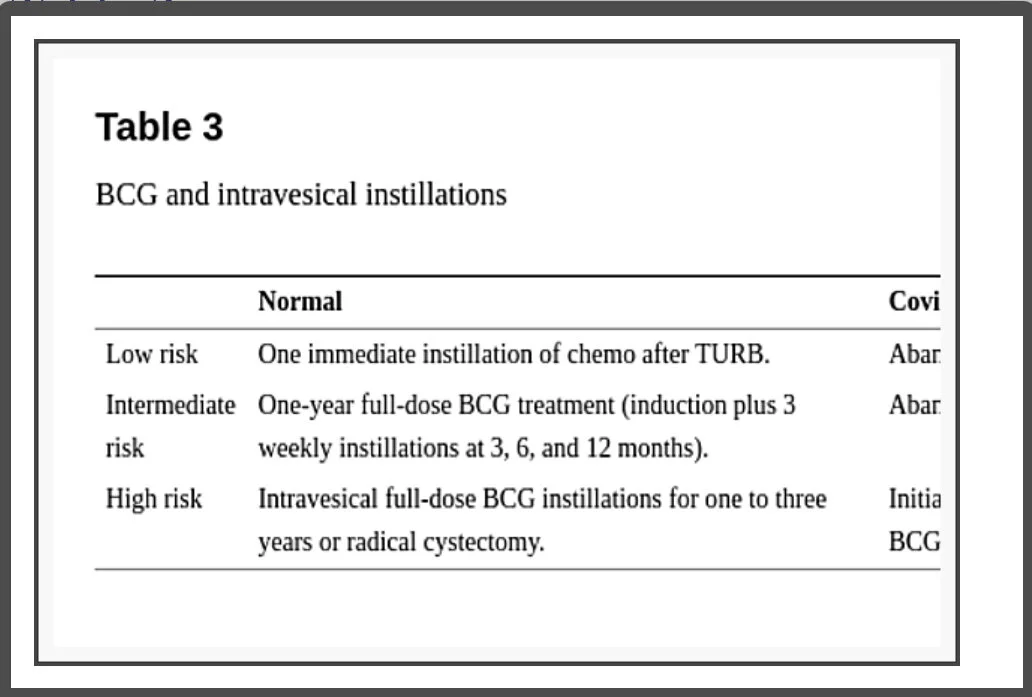

Considerations regarding BCG and intravesical instillations (Table 3)

BCG and intravesical instillations

There is no evidence that patients receiving intravesical BCG have a higher risk of COVID-19. The recommendations not to initiate, to stop, or to postpone treatment exist mainly because the risk of contracting the virus when going to a health care facility might outweigh the risk of delaying doses for some time.

The EAU guideline advises abandoning early postoperative instillation of chemotherapy in presumably low- or intermediate-risk tumors, as well as in confirmed intermediate-risk NMIBC. In high-risk NMIBC, the recommendation is to initiate treatment within 6 weeks with intravesical BCG immunotherapy with one-year maintenance [15]. The AUA guideline recommends that, for intermediate- and high-risk NMIBC, induction BCG be prioritized, since induction provides a significant benefit by reducing disease recurrence and progression, though these patients may also require a delay in therapy depending on local needs and resources. On the other hand, maintenance intravesical BCG should be stopped and reevaluated in 3 months for high-risk disease and delayed indefinitely for intermediate-risk disease, because the most significant impact of intravesical treatment is obtained during the induction course [13].

Does BCG immunotherapy impact COVID-19? A study of 40 individuals who received the trivalent influenza vaccine 14 days after being randomized (20 vs. 20) to an injection of BCG or placebo shows potential [19]. One therapeutic trial (https://clinicaltrials.gov/ct2/show/NCT04369794) and several preventive trials are underway, studying the effect of BCG vaccination on increasing resistance to infection and preventing severe COVID-19. In addition, to investigate the potential cross-protection of intravesical BCG against COVID-19, considering its T cell boosting mechanisms, we are starting the “Global Inquire of Intravesical BCG As Not Tumor but COVID-19 Solution” (“GIANTS”) trial.

Considerations regarding surgical procedures

Viral RNA has been detected in feces from day 5 up to 4-5 weeks after symptom onset in moderate cases. Wang et al. investigated the biodistribution of SARS-CoV-2 in different tissues, collecting 1,070 specimens from 205 patients with COVID-19. Of the feces samples, 29% tested positive, compared with only 1% (3 of 307) of blood samples and none of the 72 urine samples; the latter finding was confirmed by another recent study in which the virus was not detectable in the urine of tested patients [20,21]. These data have clear implications for surgeons when considering radical cystectomy and make TURB a more comfortable environment. Whenever possible, urologists should test their patients for SARS-CoV-2 48 hours before surgery, although this is not very feasible in most developing countries.

Regarding laparoscopic surgery, many considerations can be made, and a full discussion is far beyond the objective of this article, although some points are worth mentioning. Despite the lack of conclusive evidence of a difference between open and laparoscopic surgery in the risk of viral transmission to the surgical team, the latter can be associated with a higher amount of smoke particles in the operating room. Recommended modifications are to keep intraperitoneal pressure as low as possible, to aspirate the insufflated CO2 before removing the trocars, and to lower electrocautery power settings. Given the lack of evidence against performing laparoscopic procedures during the COVID-19 outbreak, they must be considered like any other minimally invasive procedure, which might be associated with a shorter hospital stay and reduced use of resources and personnel [22].

For robotic surgeries, the same precautions must be taken, with some particularities, such as avoiding two-way pneumoperitoneum insufflators to prevent pathogen colonization of the circulating aerosol in the pneumoperitoneum circuit or the insufflator. These integrated flow systems need to be configured in a continuous smoke evacuation and filtration mode. Aerosol dispersal is even more important in robotic surgery because of the common need for a dedicated, non-interchangeable operating room, where the mobile cart, the image cart, and the console are at greater risk of contamination from airborne particles [23].

Conclusions

When comparing diseases and their epidemiological impact, mortality rates are a very common yardstick to help healthcare administrators and physicians in decision making, but with a novel disease such as COVID-19 there has not been enough time to evaluate the damage in terms of mortality. In terms of fatality rates, bladder cancer exceeds COVID-19 by far and can be as high as 52%, so urologists must not postpone investigation. Cystoscopy remains the gold standard for the investigation of bladder cancer, and CT urography for imaging the upper tract in cases of macroscopic hematuria. The use of ultrasound is reserved for imaging the upper tract in cases of microscopic hematuria. The EAU guideline provides the most specific guidance for bladder cancer, and its recommendations are summarized above. Whenever TURB is necessary, extra care must be taken to ensure that muscle is included in the sample, avoiding another surgical intervention and hospitalization, but when necessary it should not be postponed, given the high progression rate of the disease.

With this study, we can propose a new way to perform the screening and monitoring of bladder cancer, considering its aggressiveness and its capacity to progress depending on stage. Follow-up cystoscopies can be postponed for 6 months for low risk, 3 months for intermediate risk, and 6 weeks for high risk, and for no longer than 24 hours in emergencies such as life-threatening hematuria, anemia, and urinary retention. This innovation in monitoring can bring greater safety to patients, especially those at higher risk, by prioritizing them, preventing the progression of the disease, and guaranteeing prompt treatment when necessary, since centers will have more time to schedule cystoscopies during pandemics.

Regarding chemotherapy, more than ever the key point is to evaluate each case individually. BCG must be considered only as an induction course, in selected intermediate-risk and most high-risk cancers; all other instillations should be stopped. Whenever possible, patients should be tested before surgery.

Research involving human participants: The authors certify that the study was performed under the ethical standards as laid down in the 1964 Declaration of Helsinki and its later amendments or comparable ethical standards.

Developing a New Medicine or Device

secondscount.org

There are five basic stages of development that a novel treatment must pass through before approval. Many good ideas for novel treatments don't even make it through these stages due to a variety of factors such as incomplete paperwork or lack of funding. A collaboration involving researchers, doctors, regulators and the private company that owns the patent on the medication or device determines how the trials are set up. Although clinical trials for device approval typically require enrolling fewer patients than drug trials do, this gap has been narrowing as device trials are subject to increasingly rigorous scientific standards.

The Five Stages of Development

The five key stages of clinical trials that a novel device or medication must pass through before - and after - it reaches the market include:

Preclinical